If you were to draft the ideal development scenario for a formal learning game, the Colorado-based makers of Woot Math may be a good place to start.

The company has used a mix of funding sources – from outside investors to the federal government – to create a game that teaches and assesses student abilities in math.

The project received a Small Business Innovation Research (SBIR) grant from the National Science Foundation, which backed serious research into the game and its personalized learning efforts.

In writing about why his venture capital group decided to support Woot Math earlier this year, the Foundry Group’s Jason Mendelson wrote, “Woot Math is unique in that it personalizes and adapts to each student and fully supports in-class and out-of-class sessions. It also provides feedback to the teacher to help them optimize their lesson plans and knowledge about particular students.”

And that is the central thing about Woot Math. It has been built around the idea of personalized learning – that students learn math in different ways and at different speeds, and that tools should be able to adapt to them as individuals.

Personalized learning is a core part of the company’s middle school product, and CEO Krista Marks said that when they developed it, they knew they wanted it to be backed by science.

It’s funny: one of the major claims about games as an educational tool is that they respond to the player, growing more difficult or revisiting a skill a student is struggling with, and that this personally tailored approach must be beneficial.

But when the Woot Math team went through the research they found very few people had actually tested the idea.

She told us, “the market just accepted the idea because it seems almost self-evident that technology can adapt and personalize to each student and improve outcomes as a result. But the evidence hasn’t lined up, and there may now even be a backlash because of that.”

We put some questions to Marks and her team, including what other developers can learn from their work. Our Q&A follows.

gamesandlearning.org: You note the real lack of empirical evidence when it comes to backing up claims that personalized learning actually helps a student. Were you surprised by how few studies have been done to test this idea? Any thoughts on why there have not been more?

Krista Marks: Initially, we expected to find empirical evidence about which personalized learning techniques worked best; we were looking to adopt best practices, and we were surprised when we didn’t find clear evidence. What we found instead were several recent surveys that called out a general lack of evidence that personalized learning technology actually helps students.

We believe that the market just accepted the idea because it seems almost self-evident that technology can adapt and personalize to each student and improve outcomes as a result. But the evidence hasn’t lined up, and there may now even be a backlash because of that; see for example the Nov. 2014 National Education Policy Center report, “New Interest, Old Rhetoric, Limited Results, and the Need for a New Direction for Computer Mediated Learning.”

We think another reason why there haven’t been more studies is that it is extremely challenging to do rigorous research in education, especially in authentic classroom settings. The New York Times has quoted F. Joseph Merlino (President of the 21st Century Partnership for STEM Education) on this topic, saying: “It is an order of magnitude more complicated to do clinical trials in education than in medicine.”

gamesandlearning.org: Given the lack of other studies, how did you go about structuring an experiment that would really test this idea?

Krista Marks: We tested two versions of Woot Math, head-to-head, in a randomized controlled trial (RCT).

In the trial, the only difference being tested was that the experimental adaptive capabilities were enabled for the test group but disabled for the control group. Because students were randomly assigned and that was the only difference, any measured benefits can be attributed to the adaptive technology for personalized learning. That is the power of an RCT: you know exactly what you are measuring, so long as you have a large enough sample to establish statistical significance.

We also conducted the experiment in real, uncontrolled classrooms; that is, teachers used the product with their students just as they might use any other software. We ran the trial in multiple grades and at multiple sites with differing demographics (urban, suburban, etc.). That allows us to be confident that the results are reproducible and generalizable.

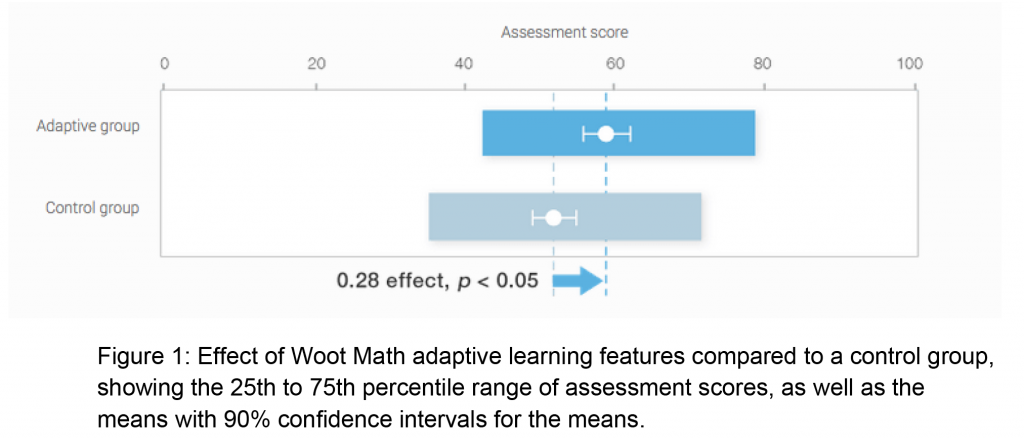

The study results show statistically significant evidence that the adaptive version improved learning and retention, with moderate to large effect sizes (g = 0.23 to g = 1.50, p < .05) in a sample of 350 students in grades 3–6. (The study was conducted during the last six weeks of the school year, so the participating students had nearly completed the grade for which their results are reported.)
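For readers who want to see what the g figures measure: Hedges’ g is a standardized mean difference, the gap between the two groups’ average scores divided by their pooled standard deviation, multiplied by a small-sample correction factor. The Python sketch below computes it from two lists of scores. The function name and the scores are illustrative only, not data from the Woot Math study, and the white paper may use a variant of the calculation (for example, one adjusted for pretest scores).

```python
import math
from statistics import mean, variance


def hedges_g(treatment, control):
    """Standardized mean difference (treatment minus control), Hedges' correction."""
    n1, n2 = len(treatment), len(control)
    # Pooled variance from the two sample variances (n - 1 denominators).
    pooled_var = ((n1 - 1) * variance(treatment)
                  + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    # Cohen's d: difference in means in units of pooled standard deviation.
    d = (mean(treatment) - mean(control)) / math.sqrt(pooled_var)
    # Approximate correction J = 1 - 3 / (4 * df - 1), with df = n1 + n2 - 2,
    # which shrinks d slightly to offset its upward bias in small samples.
    j = 1 - 3 / (4 * (n1 + n2) - 9)
    return j * d


# Hypothetical assessment scores for two tiny groups.
treatment = [7, 8, 9, 6, 8]
control = [6, 7, 7, 5, 6]
print(round(hedges_g(treatment, control), 3))  # prints 1.265
```

A positive g means the first group scored higher; with samples of hundreds of students, as in the study, the correction factor is very close to 1.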

The two questions that our study was intended to answer were the following:

● Is there evidence that an adaptive version delivers better learning and retention?

● Is there evidence that the adaptive version either positively impacts student sentiment or leaves it unchanged?

The study implemented a parallel-group randomized controlled trial at four demographically diverse sites and was designed to meet the What Works Clearinghouse standards for evidence of what works in education. Student participants were randomized into the test and control groups in equal numbers, and the trial was fully blinded – students, teachers, and investigators did not know which group each student participant had been assigned to.
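As a rough illustration of equal-allocation randomization with blinding, the Python sketch below shuffles students within each site, deals out two opaque labels alternately, and randomly decides which label stands for the adaptive condition, keeping that key separate until analysis. It is a generic sketch under those assumptions, not the procedure Woot Math used; the function name, the `(student_id, site)` input format, and the “A”/“B” labels are hypothetical.

```python
import random
from collections import defaultdict


def assign_arms(students, seed=None):
    """Parallel-group randomization with equal allocation, stratified by site.

    `students` is a list of (student_id, site) pairs (hypothetical format).
    Returns (assignment, key): assignment maps student_id -> opaque label
    "A" or "B"; key maps each label to its condition and would be withheld
    from students, teachers, and investigators until unblinding.
    """
    rng = random.Random(seed)
    by_site = defaultdict(list)
    for student_id, site in students:
        by_site[site].append(student_id)

    # Shuffle within each site, then deal labels alternately across the
    # concatenated order: each site is split as evenly as possible, and the
    # two arms differ in total size by at most one student.
    order = []
    for site in sorted(by_site):
        ids = by_site[site]
        rng.shuffle(ids)
        order.extend(ids)
    assignment = {sid: "AB"[i % 2] for i, sid in enumerate(order)}

    # Randomly decide which opaque label denotes the adaptive condition.
    labels = ["A", "B"]
    rng.shuffle(labels)
    key = {labels[0]: "adaptive", labels[1]: "nonadaptive"}
    return assignment, key
```

Passing a fixed `seed` makes the assignment reproducible for audit; with an even number of students, the two arms come out exactly equal.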

Both groups (test and control) completed a treatment intervention using Woot Math, with the only variation being the use of Woot Math’s adaptive capabilities. Students in the test group were given adaptive instructional sequences, and the control group was given nonadaptive sequences (i.e., a fixed linear order set by content experts). By isolating the effects of Woot Math’s adaptive features, we were able to answer the research questions posed above.
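To make the adaptive/nonadaptive contrast concrete, the toy Python sketch below compares a fixed sequence with one that revisits a skill after a wrong answer, the kind of responsiveness described at the top of this article. The retry rule, the `max_retries` limit, and the skill names are hypothetical; the strategies Woot Math actually used are described in its white paper, not here.

```python
from typing import Callable, List


def linear_sequence(skills: List[str]) -> List[str]:
    """Nonadaptive: every student sees the same expert-chosen order."""
    return list(skills)


def adaptive_sequence(skills: List[str],
                      answers_correctly: Callable[[str, int], bool],
                      max_retries: int = 2) -> List[str]:
    """Toy adaptive rule: revisit a skill after a miss, up to max_retries times.

    `answers_correctly(skill, attempt)` stands in for the student's response.
    Returns the activities in the order they were presented.
    """
    shown = []
    for skill in skills:
        for attempt in range(max_retries + 1):
            shown.append(skill)
            if answers_correctly(skill, attempt):
                break  # answered correctly: move on to the next skill
    return shown


# Hypothetical student who misses "equivalent fractions" on the first try.
def student(skill: str, attempt: int) -> bool:
    return not (skill == "equivalent fractions" and attempt == 0)


skills = ["ordering fractions", "equivalent fractions", "fractions on a number line"]
print(adaptive_sequence(skills, student))
# ['ordering fractions', 'equivalent fractions', 'equivalent fractions', 'fractions on a number line']
```

A student who answers everything correctly sees exactly the linear sequence, so in this toy model the two versions differ only where a student struggles.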

At the end of the trial, participating students were given a summative assessment on the material covered (ordering and equivalence of fractions). The summative assessment was designed by the research team and is described in detail in the white paper. The majority of assessment tasks (64%) required students to construct a response, e.g., drag a fraction to the correct location on a number line or model a fraction using a bar model. Given the grade level of participating students, the reasoning required to respond successfully to these tasks was not trivial.

gamesandlearning.org: What did your experiments find and what are your thoughts on the potential for personalized learning?

Krista Marks: We found clear evidence that the students in the experimental group had improved learning and retention. In other words, the personalized learning features produced real and measurable benefits. Evidence of this sort, from a randomized controlled trial, is considered the “gold standard” for establishing cause and effect. The bottom-line statistical result showed an effect size of g = +0.28 (p = 0.015).

Given the nature of what we were testing (a small feature set) and the amount of usage tested (students used the software for an average of 3 hours), that is an impressive effect size. To put it in perspective: many studies have looked at the effect of giving homework versus not giving homework, and on average they have found an effect size of g = +0.29 per year.

Here’s a graphical representation from our white paper:

It’s a very promising result and indicates definite potential for personalized learning. However, this is only one study, and it is important not to overgeneralize. Here’s what we say in our white paper:

“Our study examined the effects of personalized learning with respect to a small, well-defined topic: ordering and equivalence of fractions, a topic that has long been a challenge for students to master and has been the subject of much research.

Questions about the generalizability of the results from this study include the following, which could be addressed through follow-on research:

- Do the results generalize to other topics within mathematics, other grade levels, or other subjects?

- Are the results dependent on the style of online instruction and theory of learning used by Woot Math?

- To what extent are the results dependent on the observed levels of student engagement?

- To what extent are the results dependent on context and conditions of use? In particular, what is the impact of longer-term treatment and how well are effect sizes maintained when measured sometime after the treatment is completed?”

“The adaptive techniques studied should certainly be expected to generalize to other topics, subjects, and grades, as nothing specifically limits them to the contexts chosen for this study.

That said, it is certainly the case that the application of those techniques in this study benefited from the measurability of mathematical tasks and from the extensive pedagogical research on the ordering and equivalence of fractions. One noteworthy hypothesis is that adaptive learning software may amplify both the strengths and the weaknesses of the underlying instructional approach, and that in the case of this study, the adaptive effect represents the magnification of a strong underlying effect derived from decades of research on teaching and learning rational number.”

gamesandlearning.org: How easily do you think other developers can build scientifically tested personalized learning into their games?

Krista Marks: Running a randomized controlled trial is hard.

However, we think developers can learn a lot from running informal experiments: testing features like this with small groups of students and trying to get a feel for what seems to be working and what isn’t. We did this weekly over many weeks before running our RCT and felt that we were able to home in on some productive personalized learning strategies. We would recommend this practice to all developers. We have published details on the strategies that seemed to work for us.

gamesandlearning.org: Did the learning science aspect of this project make it harder to develop an engaging game? In short, did you have to sacrifice a lot of the fun to make it effective?

Krista Marks: We think the opposite is true. We think these aspects improved the gameplay, and we also tried to measure this in our RCT. The differences between the control and experimental groups weren’t large enough to be statistically significant, but they trended positive. It seems probable to us that personalized learning, done well, should improve the experience.

Game designers work hard to get their games to feel right in terms of being challenging but not too hard. It’s no different when designing for learning, though maybe dialed slightly harder (toward “productive struggle”) than a pure entertainment game would be.